If you believe the hype, Google and co. say that 30% or more of their code is AI-generated. I would argue that, while there may be a lot of autocompleted code, in many larger engineering teams the majority of developers risk being dragged along or left behind, and not on their own terms. While I would not call AI agents the death of the programmer, I do think a fundamental paradigm shift is underway.

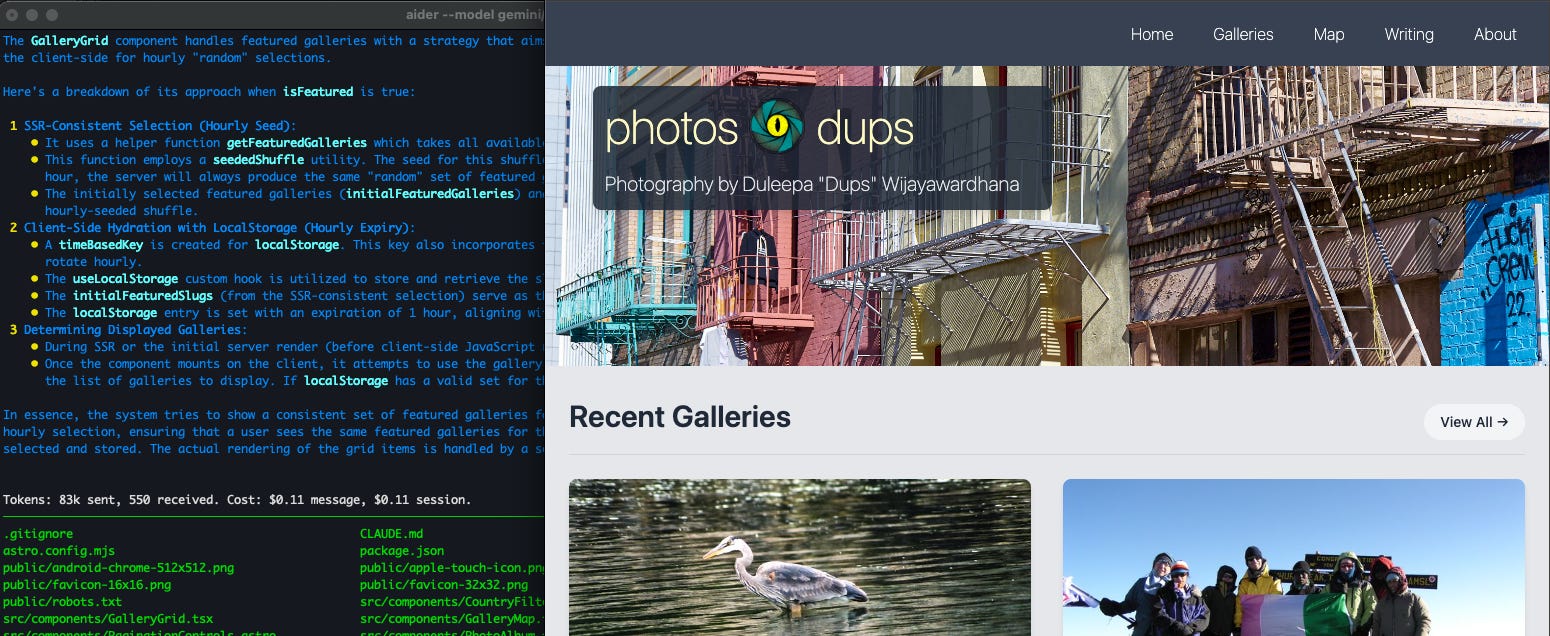

Last week, I decided that for the next iteration of my personal sites, dups.ca and photos.dups.ca, I would build and deploy them using only Claude Code or Aider with Gemini 2.5 Pro. The result did not surprise me, but it was nonetheless amazing. In a matter of hours from start to finish, I had two sites built in Astro and React and deployed to Cloudflare Pages, and I wrote almost no actual code. In the process, I experimented with and learned a fair bit about Astro, a technology we are considering adopting at work but that I had never tried, and I shortened my path to improving my online presence from weeks to days.

I talked to various people about my personal experience, as well as the non-trivial work my small team at work is doing with similar techniques, and I received some interesting comments and reactions.

Those who are only tangentially involved in building applications were impressed. Technology leaders who understand the value of reaching an outcome efficiently, with some measure of quality, nodded their heads; this is the future they envision for their organizations. From individual developers and programmers across a few different organizations, I received comments spanning such a range that I felt the rubber was not yet hitting the road when it came to AI adoption in mid-sized to larger enterprises.

For some folks, my experiences and results matched the feedback from my own team: “Yes, of course, that’s what I’m doing every day. There’s lots more to learn and experiment with.” A few folks I know made comments about me “vibe coding,” and one or two said, “But it’s totally not useful for important tasks, Dups; sure, for a POC or something small.” Some back-end devs said, “Well, that’s just front-end code.” Back-end coders have always believed that the first coders to be replaced should be front-end coders. Finally, from both back-end and front-end coders: “I’m glad that worked for you, but I don’t really care. I tried it, and I didn’t like the results.”

In my experience, while autocompleted code does make it into a remarkable share of our codebases no matter the company, most larger engineering teams have not embraced AI to the degree that I, as a technology leader, would expect or hope. That means we, as companies and as individuals, are unable to unlock its full potential, even if the hype around AI’s abilities is currently running ahead of reality. I would argue that the hype about AI being deeply infused into engineering teams is also running ahead of reality.

My belief is that, in engineering teams larger than a startup’s, this results from issues at various levels: from management being unable to provide the right tools with the necessary security and compliance, all the way to reluctance or indifference among individuals. Depending on where those individuals sit in your team or organization, adoption may be much shallower than any technology leader expects, or perhaps even reports. While I have yet to come across such a person myself, it would not surprise me if some engineers were actively discouraging Agentic AI tools or workflows.

If there is one thing any developer or leader takes away from this post, I hope it is the following: if you are not experimenting with, understanding, learning, and spending time on Agentic AI practices in software engineering, within a year or two you will be dragged along and/or left behind. You want that to happen on your terms. In my opinion, it is absolutely not the case that we will suddenly hire fewer coders. However, the kind of person and coder we look to employ is going through a paradigm shift, if it hasn’t started already.

The Human Element: Challenges and Mindset Shifts

One of the problems I see across a number of job types and companies is the tendency to initially try to adapt tools to the way we’ve always done things. The comment “I tried it, but it didn’t work as I expected” seems to stem from this problem. Over the last couple of decades, Google has wired our brains so that we use AI tools and assistants the way we use Search: type a question as we would into any web search bar, take the top result, and move on.

With true Agentic AI and some of the newer coding tools, you need to go beyond that paradigm and start thinking about different ways to get these tools to work for you. Imagine you had an assistant, a human one, who would not complain about being asked to do rather annoying, boring tasks and who was competent in many fields. How would you treat that person and the things they might come up with? Shane Parrish, in “Clear Thinking,” describes the tactic of continually asking, “Is this the best you can do?” With an AI assistant, you can take that ad infinitum without the assistant quitting. That means going beyond surface-level interrogation and shallow thinking, analysis, and action. This is also a good time for a reminder that, despite lots of grounding, these tools still tend to hallucinate a fair bit.

Another common comment: “But they don’t produce the best code. I pride myself on writing the best-looking or best-structured code. I don’t want to have to go fix it; I might as well write it myself.”

This comment is usually coupled with a belief that AI coding assistants are not good at back-end code. While the physical act of coding might give someone joy, I would argue that most people should try to shift their thinking and figure out how to ground these AI assistants to create code in the styles and structures that give them joy. I can genuinely say that, depending on the chosen language, these AI assistants can successfully go beyond front-end code with some effort and clever prompting.

A comment from at least one tech lead: “We have a project to get done; I don’t have time for the team to experiment with all that.”

There’s the adage about the lumberjack who spends time sharpening their axe before cutting down trees instead of wasting time with a dull one. In larger projects where a team can spend, for example, three months coding a feature, you can bet that the team will produce a large amount of code. You can also bet that it will take an equal, and sometimes greater, amount of time to modify and debug that code once the feature is released and real-world use cases demand action. Such time horizons understandably breed reluctance to abandon tried-and-true practices and face the unknown when there are company or product manager goals to fulfill.

I am likely the leader who wants that goal fulfilled; however, I would argue that this is exactly the time for an Engineering Manager, Product Manager, or Tech Lead to push back. Maybe they can encourage their team to take a week or a month to experiment with different tools, learn new paradigms, rework plans, and consider how AI can help them succeed. I would rather a leader spend a month trying to shorten iteration cycle times than give me a specific day when a feature will be done. We all know that, no matter how well a team estimates, most teams miss the mark, and long iteration cycles make everything doubly worse. Remember that, in theory, AI can also be used to maintain, change, and iterate on what it builds.

I do understand fear. When the media is full of claims about job losses and a bad job market, humans tend to be slower to embrace change and take risks. Fear is often dressed up in seemingly plausible reasons that fall apart on closer inspection. I guarantee that a good programmer is worth far more than the sum of the code they have written or will write!

Taming the Beast: Strategies for Larger Codebases

“Well, it’s good for boilerplate coding, smaller codebases, or startups. Forget it, Dups, have you seen how much code we have?” This seems to be the fallback argument I’ve heard most often, in any number of teams and even in non-programming settings.

A long time ago, a junior developer asked me, “What would make me a senior, expert, or lead developer?” A real expert or lead developer is, among other things, one who can think outside the box, one who understands that rules are meant to be broken and knows when to break them, one who is creative and imaginative, and especially one who understands that there’s always a solution; you just need to think deeply and keep asking why. A senior engineer surrounds their knowledge with wisdom and context, which is much how I believe an AI can be made to deal with a larger codebase.

The argument that AIs cannot understand the full context of how a particular function is used can equally be leveled at any developer, senior or otherwise, who “fixes” a piece of code only to introduce a vulnerability because they did not understand exactly how it is used.

Here are some simple ideas to consider:

- Adapt a mono-repo/monolith into a better-organized system. You might even consider using an AI to help you with that reorganization.
- Establish good ownership of parts of the codebase where possible. This is a human concern, but an often neglected one. You can even instruct AI assistants to identify owners and ask them for help.
- Create AI agents that own specific parts of the codebase, so that each agent is especially well grounded, much as you would expect a senior developer to be.
- Put context into specific parts of the mono-repo/monolith so that a future AI (and indeed a human coder) can understand the codebase.
- Try different models. Each model family (Gemini vs. OpenAI’s models vs. Claude) seems to reason or ground itself differently. I have had quite different results depending on the paths and reasoning each took.

Ultimately, I believe that in many companies larger than a startup, developers of all stripes are waiting for management to force tools on them. Of course, in many organizations, bringing tools into an enterprise environment requires legal, safety, and compliance reviews. However, software engineering is changing at a breakneck pace, and you should at least keep up to date personally. Ironically, people in management, like me, may be waiting for you to help us change and, in doing so, help yourselves succeed.

AI assistants and Agentic AI are not going away. You need to adapt to use them; do not wait until they seemingly adapt to you. The next generation of coders and technical experts is already around the corner, and your deep knowledge is required to guide the use of AI in the right directions. Every technology practitioner should be wary of being dragged or forced into change; instead, own the change, strive to adapt, and thrive in meeting the challenge.